What People Think AI Engineers Do (vs Reality)

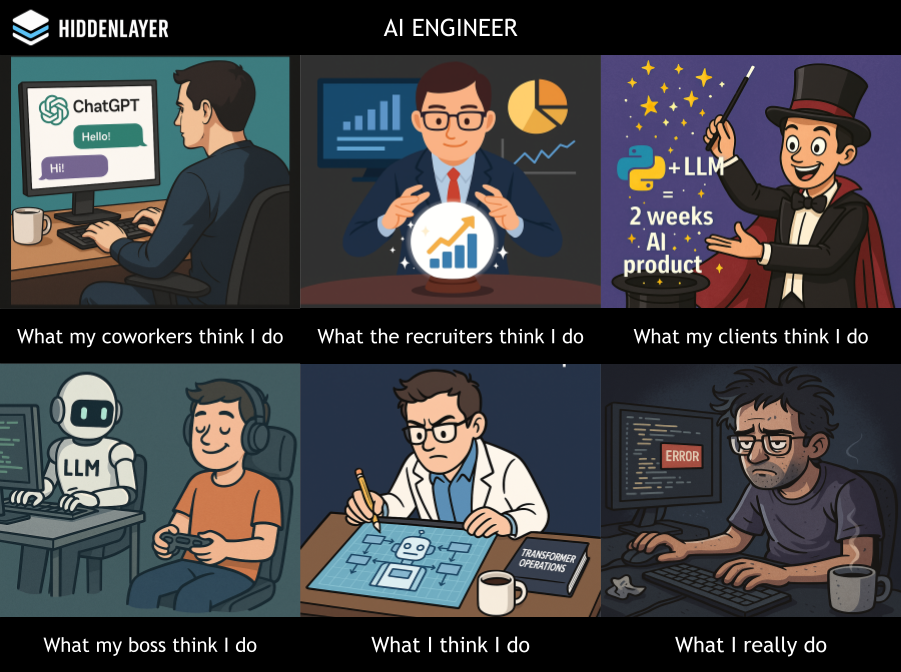

We’ve all seen those memes that contrast “What people think I do” with “What I really do.”

So I made one for the AI Engineer role, because honestly, the misconceptions are getting out of hand.

Let’s break them down:

🧠 What my coworkers think I do

“Talk to ChatGPT all day.”

To be fair, this happens. But it’s not the core of the job. Prompting is not engineering.

📈 What my clients think I do

“Use a crystal ball to generate magical business insights.”

Expectations are often sky-high. They want dashboards powered by LLMs, instantly, with zero context or data cleanup.

🎩 What recruiters think I do

“LLM + Python + 2 weeks = AI product.”

It’s the classic underestimation of the real complexity behind even the smallest deployment.

🕹️ What my boss thinks I do

“Let the LLM do the work while I play games.”

Automation is powerful, but someone still has to design, test, monitor, and fix it at 2AM…

🤖 What I think I do

“Architect AGI with fine-tuned transformer ops.”

Let me dream. Sometimes, I do feel like I’m building the future.

🧟 What I really do

“Debug prompt injection in a legacy system at 2AM.”

This is the most honest panel of them all.

Dirty data, undocumented code, unpredictable LLM behavior: welcome to AI in production.

💬 Final thoughts

The AI Engineer role is still misunderstood: often romanticized, underestimated, or oversimplified. It’s not just about prompts or training GPT clones. It’s engineering: systems, pipelines, latency, logging, versioning, and everything in between.

If you’re getting into this field, or working in it and feeling frustrated: you’re not alone.

This blog exists to share that reality. Every week. With code, insights, failures and lessons.

Let’s build the future of AI together.